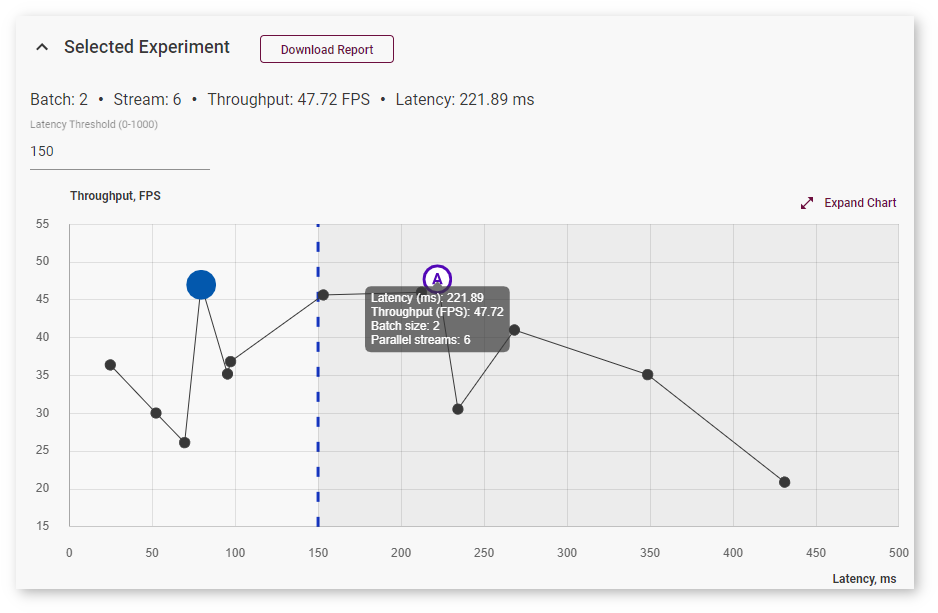

How To Set Batch Size In Workbench

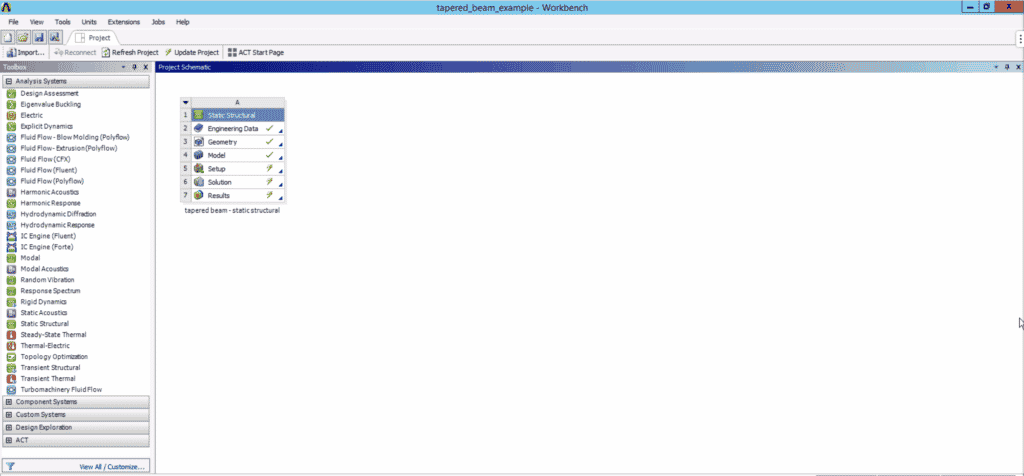

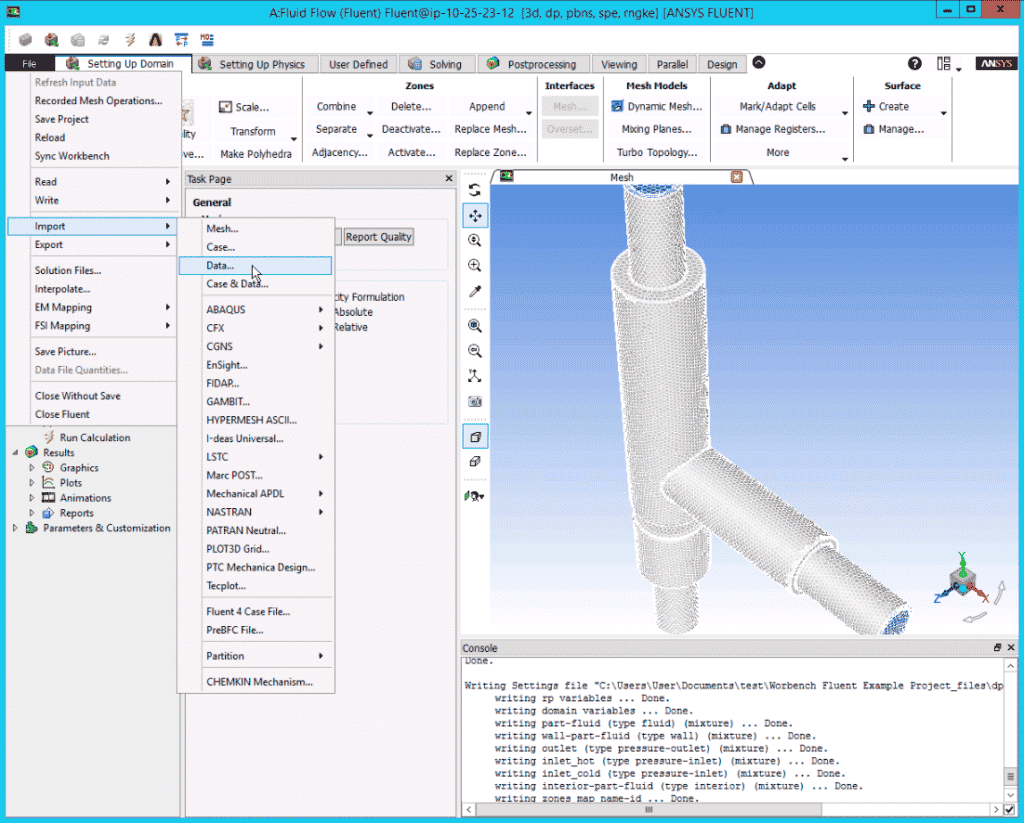

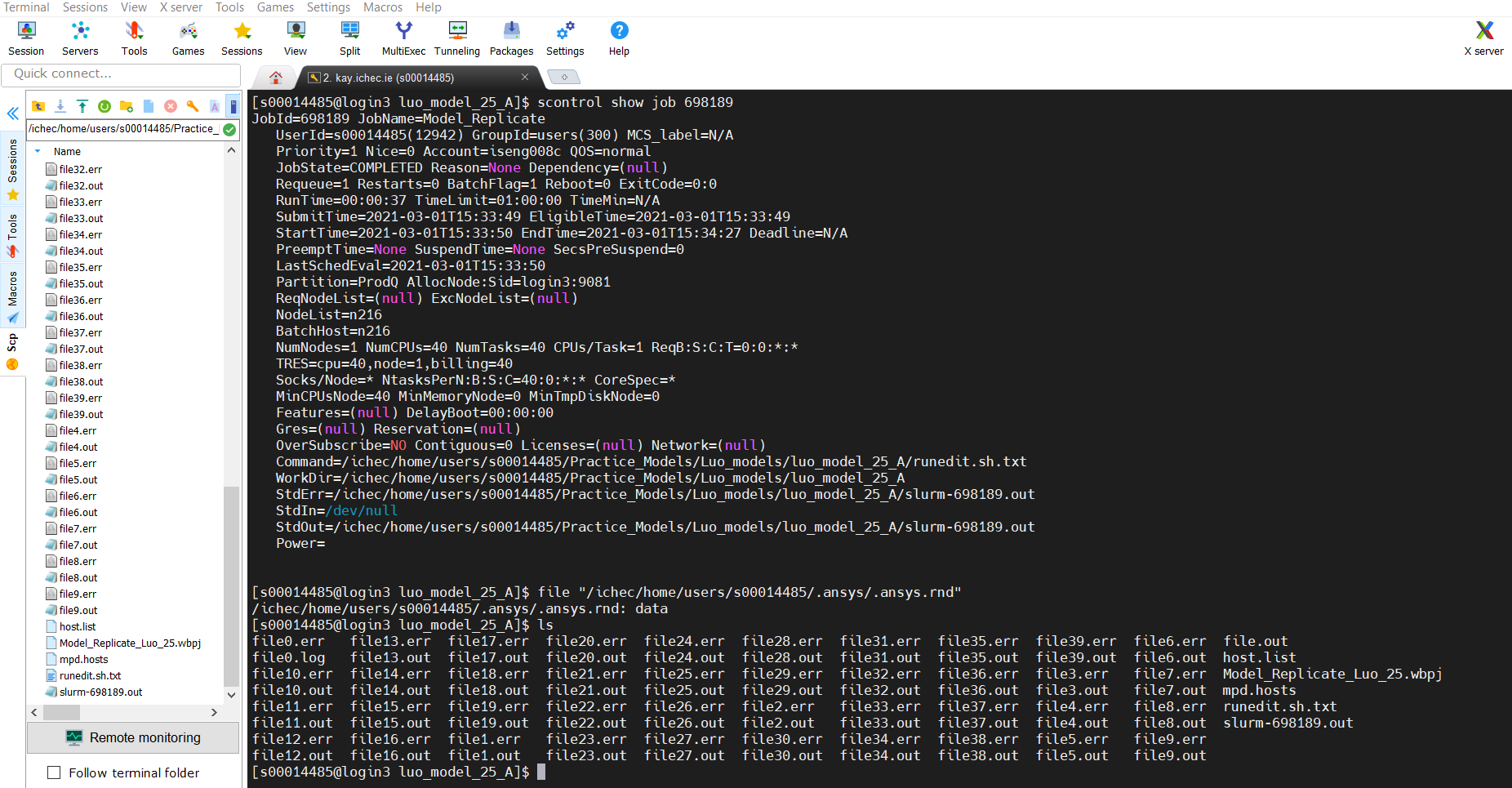

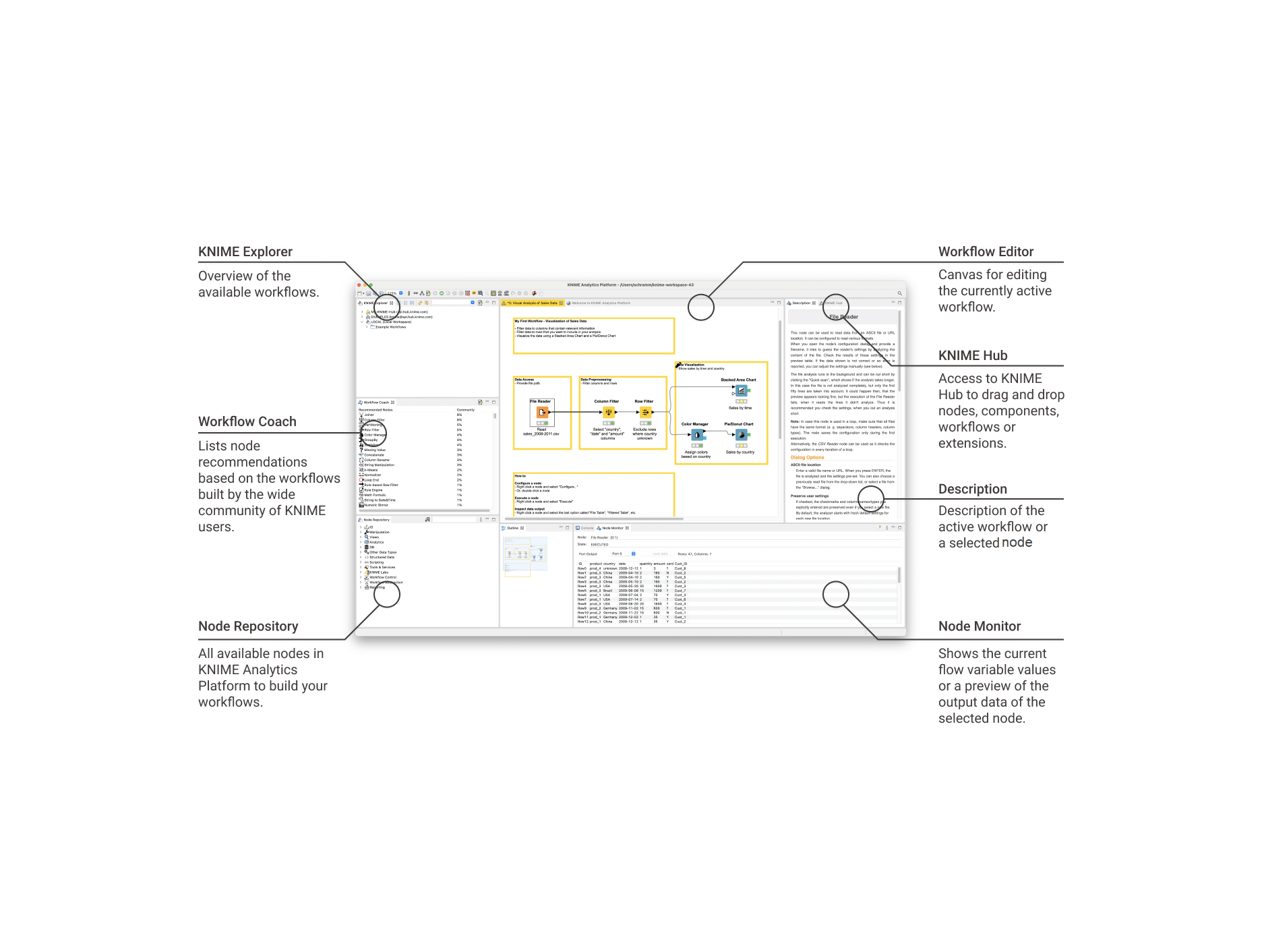

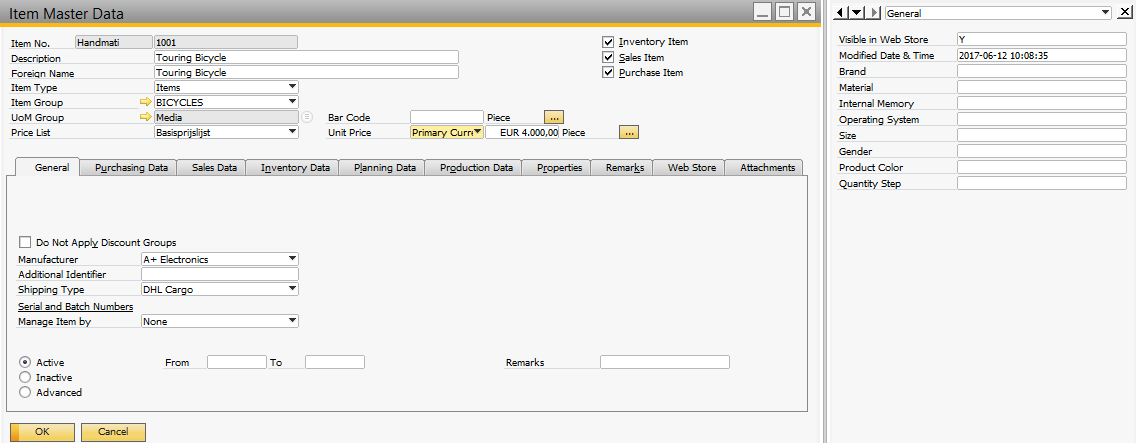

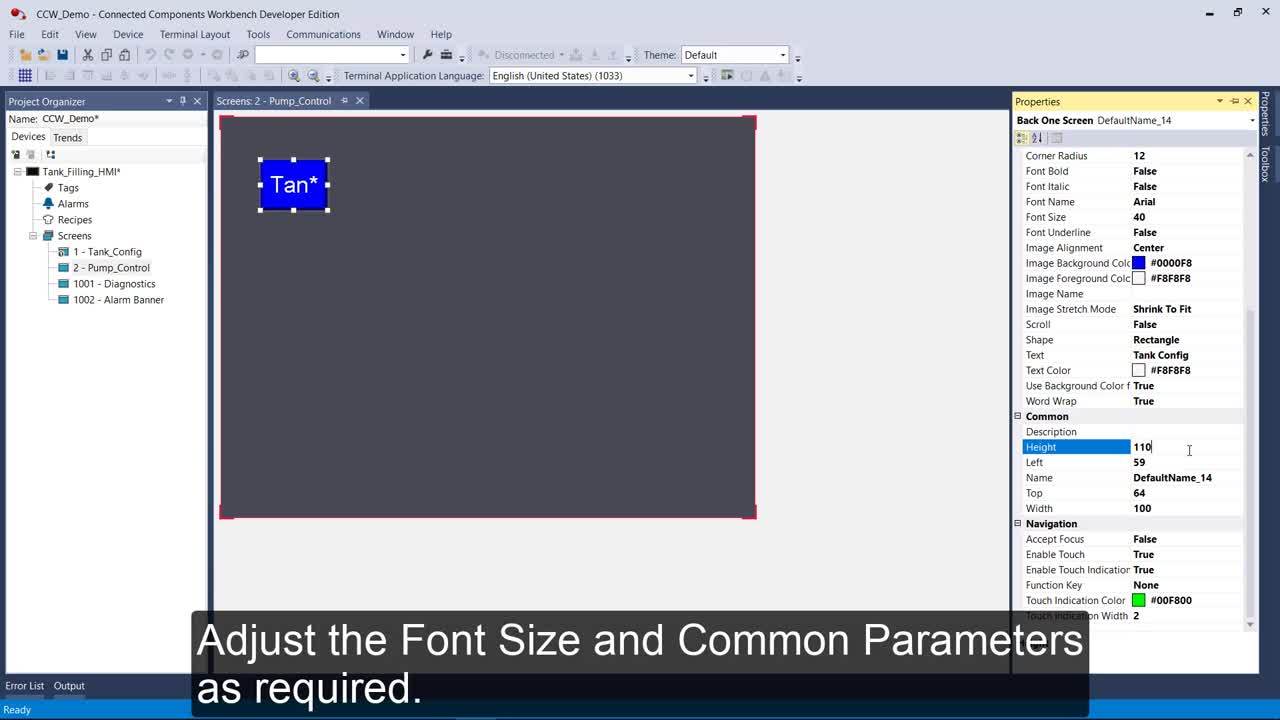

A batch size of 32 with 100 epochs gave me the best results when training a model in Keras with 3 hidden layers. In the Bulk API, the batch size is the number of records submitted in one file, which is called a batch. For `solve_workbench_project --input --single-frame --multi-frame --parameter-sweep --use-license-feature MEBA ANSYS`, these are the required parameters that have to be selected.
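In Keras these two settings go into `model.fit(x, y, batch_size=32, epochs=100)`. The sketch below needs no Keras. It only does the arithmetic of how many weight updates such a run performs. It assumes the last partial batch of each epoch still counts as one update, and the 1,000-sample dataset is a hypothetical figure, not one from the post.

```python
import math


def updates_per_run(num_samples: int, batch_size: int, epochs: int) -> int:
    """Count the gradient updates in a mini-batch training run.

    Each epoch walks over all samples once in groups of `batch_size`;
    a final partial batch is assumed to count as one more update.
    """
    if num_samples < 1 or batch_size < 1 or epochs < 1:
        raise ValueError("all arguments must be positive")
    steps_per_epoch = math.ceil(num_samples / batch_size)
    return steps_per_epoch * epochs


# Hypothetical dataset of 1,000 samples with the settings from the post.
print(updates_per_run(1000, batch_size=32, epochs=100))  # 3200
```

Halving the batch size roughly doubles the number of updates per epoch, which is why batch size and epoch count are usually tuned together.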

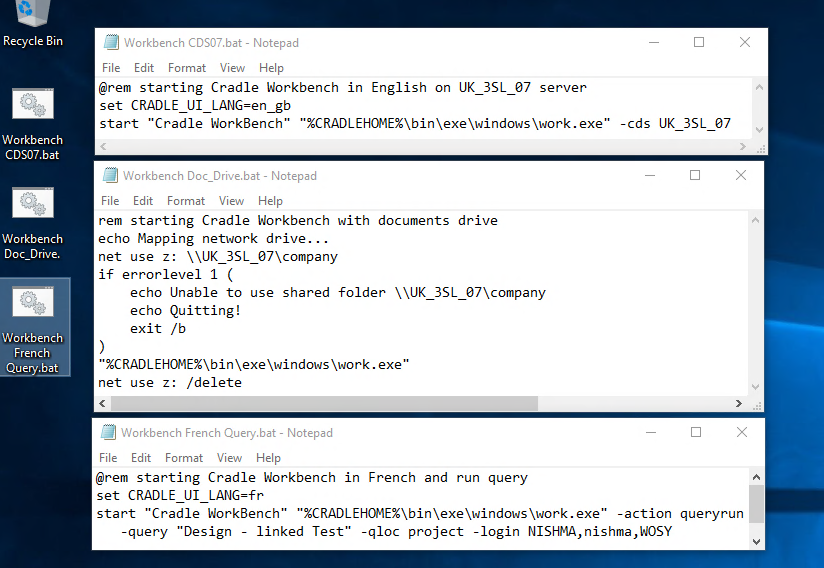

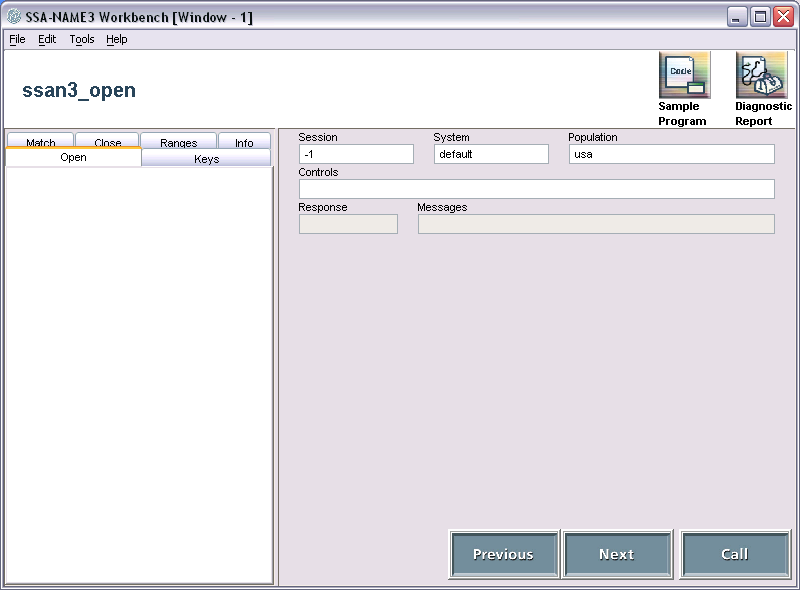

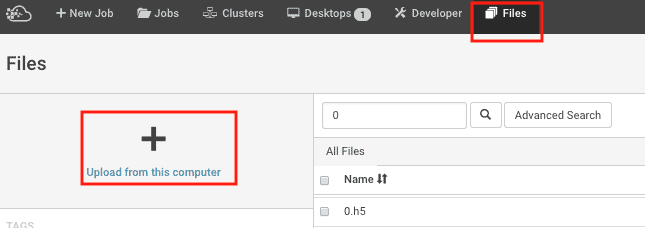

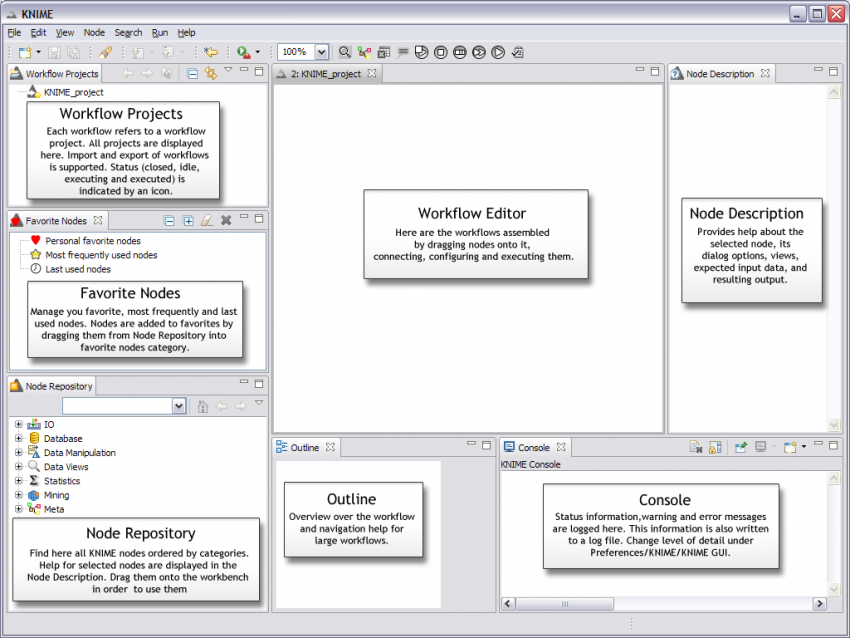

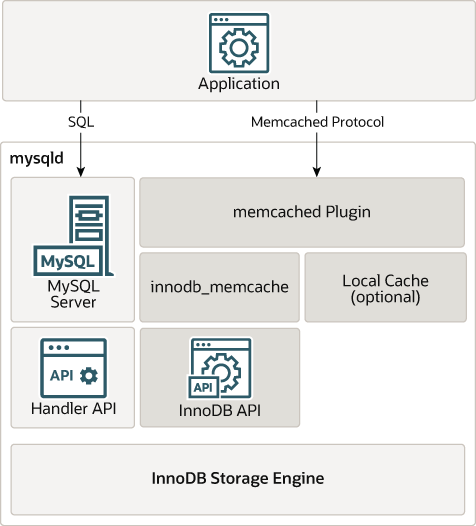

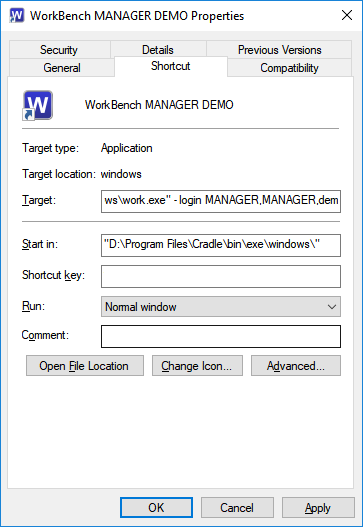

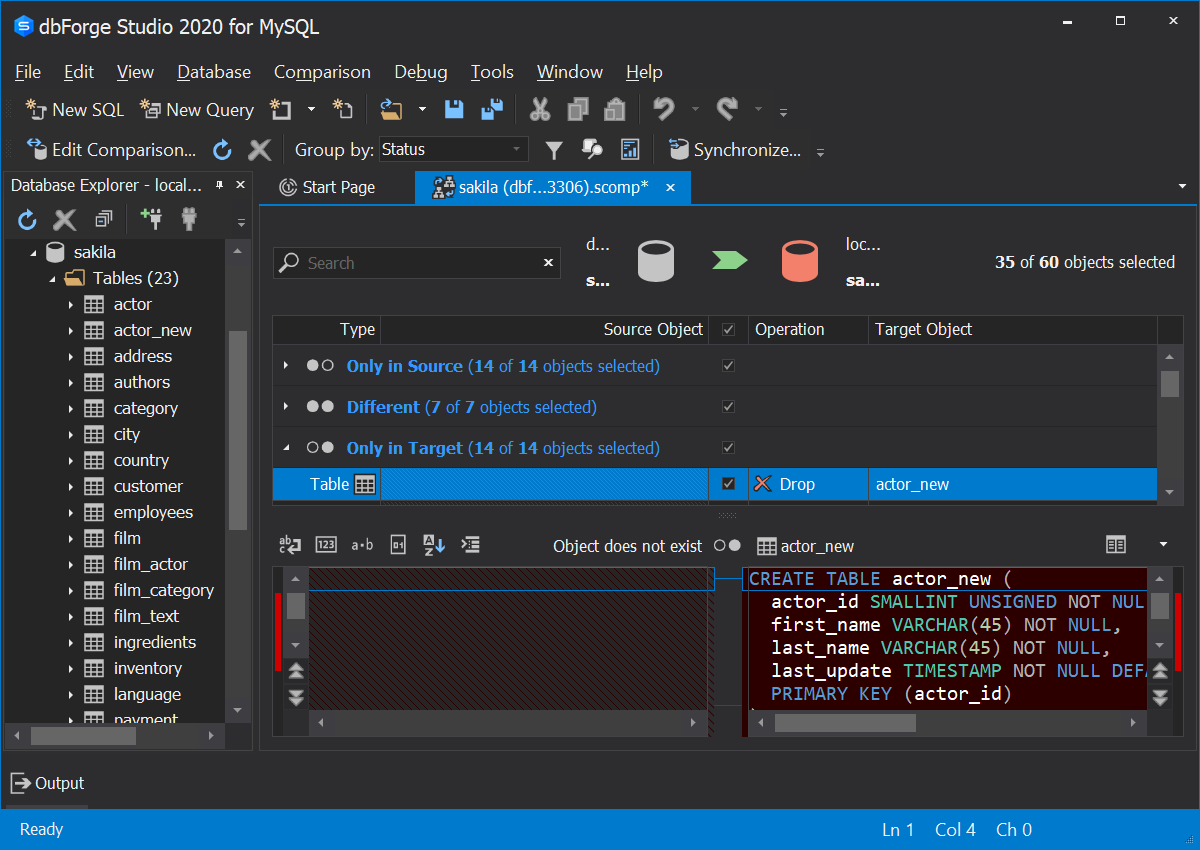

When running SQL Workbench/J in batch mode, you can define the connection either by using a profile name or by specifying the connection properties directly. Workbench tries to keep the files at the correct size so as not to trip over any batch size limits. Here are the general steps for determining the optimal batch size to maximize process capacity.
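The two ways of defining the connection can be sketched as a small command-line builder. It assumes the SQL Workbench/J batch parameters `-profile` (saved profile) or `-url`, `-username`, `-password` and `-driver` (direct connection), plus `-script` for the file(s) to run, as I understand them from the SQL Workbench/J manual; check them against your version. The function name, jar path and example file names are hypothetical.

```python
from typing import List, Optional, Sequence


def build_wb_command(scripts: Sequence[str],
                     profile: Optional[str] = None,
                     url: Optional[str] = None,
                     username: Optional[str] = None,
                     password: Optional[str] = None,
                     driver: Optional[str] = None,
                     jar: str = "sqlworkbench.jar") -> List[str]:
    """Assemble a SQL Workbench/J batch-mode command as an argument list."""
    if not scripts:
        raise ValueError("at least one script is required")
    # Several scripts are passed to -script as one comma-separated value.
    cmd = ["java", "-jar", jar, "-script=" + ",".join(scripts)]
    if profile:
        # Option 1: reuse a connection profile that was saved in the GUI.
        cmd.append("-profile=" + profile)
    elif url:
        # Option 2: spell out the connection properties directly.
        cmd.append("-url=" + url)
        if username:
            cmd.append("-username=" + username)
        if password:
            cmd.append("-password=" + password)
        if driver:
            cmd.append("-driver=" + driver)
    else:
        raise ValueError("give either a profile name or a JDBC URL")
    return cmd


# Hypothetical profile and script names.
print(build_wb_command(["export.sql", "cleanup.sql"], profile="MyProfile"))
```

Returning a list rather than a single string means the result can be handed to `subprocess.run(cmd, check=True)` without worrying about shell quoting of profile names that contain spaces.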

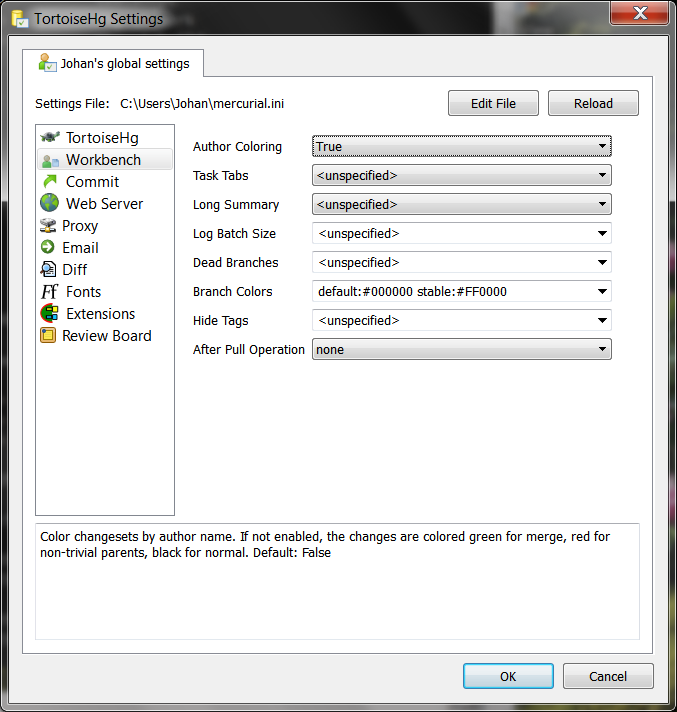

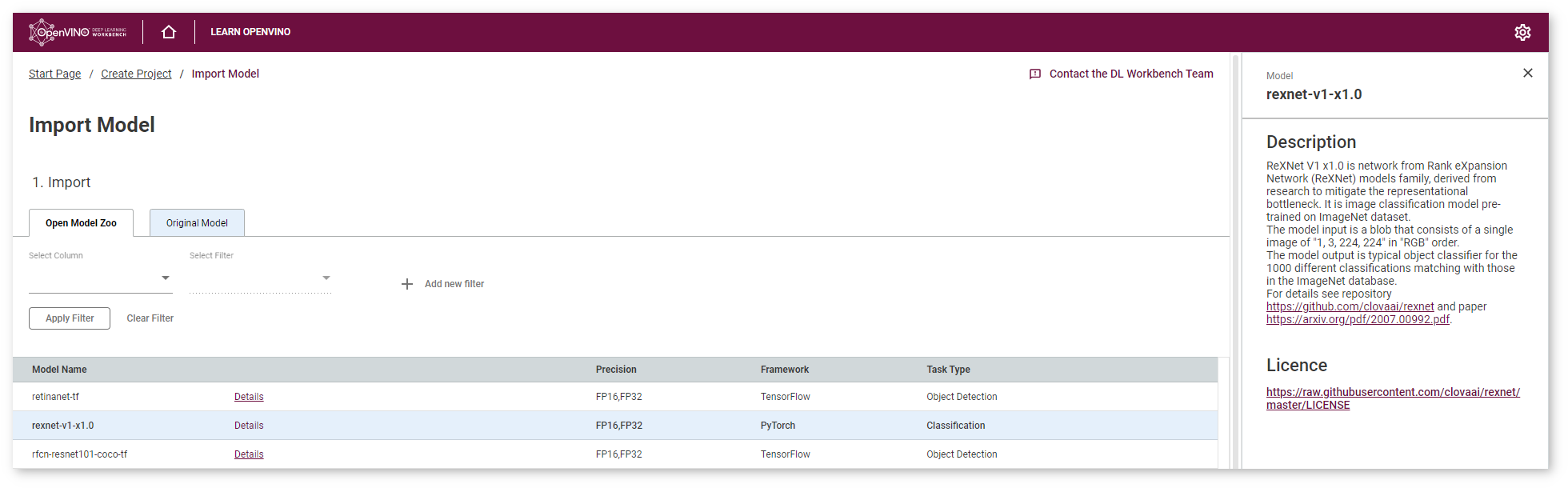

You then size your inventory accordingly and make sure that your pull flow respects your campaign frequencies. To create a new Workbench profile, follow these steps. This is a feature/limitation of the underlying API.

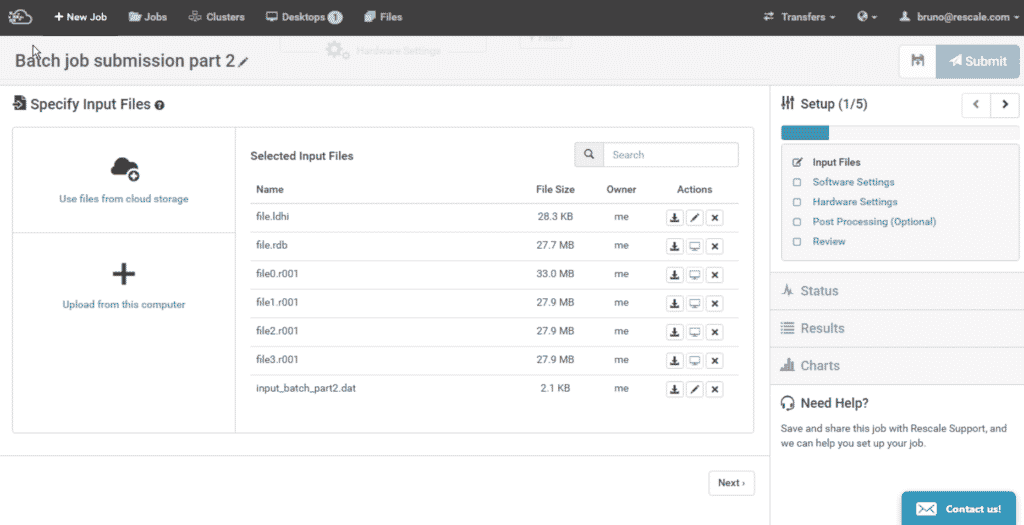

If you have more than 100 datasets, set the batch size to 10 and the datasets to 10 or higher. You can use this library to access Bulk API functions. Enter a description for the profile template.

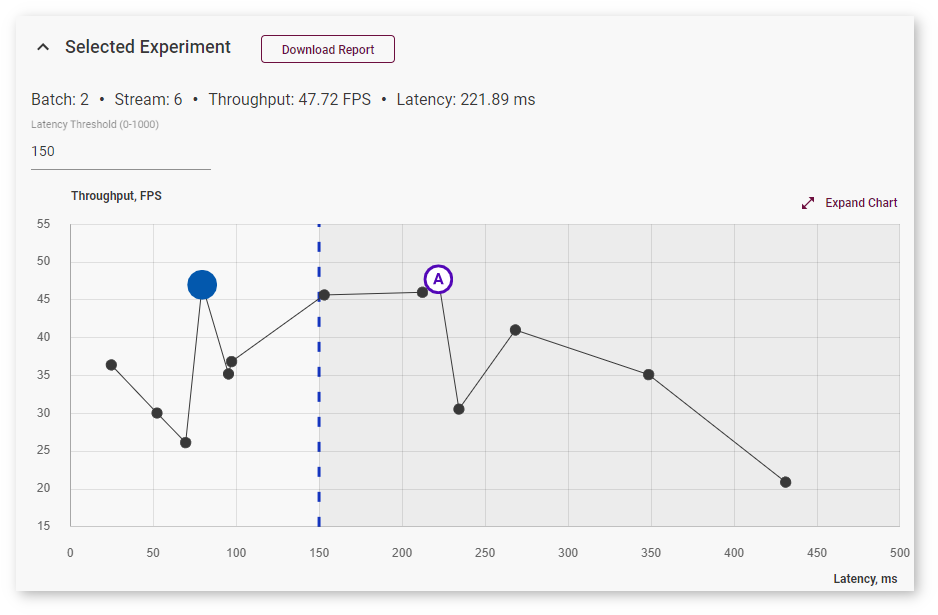

As long as each chunk runs in less than 5 minutes, you should be okay.

Specifying the script files: the script to run is specified with the parameter -script. Multiple scripts can be specified by separating them with commas.

Translate batch sizes into lead times: if your batch size is 10,000 and you process 1,000 per hour, the next batch will wait at least 10 hours before being processed.
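The lead-time rule is a single division: the time one batch occupies the process is its size divided by the throughput, and the next batch waits at least that long. A minimal sketch (the function name and the 2,000-unit comparison batch are hypothetical):

```python
def min_wait_hours(batch_size: int, units_per_hour: float) -> float:
    """Hours one batch occupies the process, i.e. the minimum wait for the next one."""
    if units_per_hour <= 0:
        raise ValueError("throughput must be positive")
    if batch_size < 0:
        raise ValueError("batch size cannot be negative")
    return batch_size / units_per_hour


print(min_wait_hours(10_000, 1_000))  # 10.0, the example from the text
print(min_wait_hours(2_000, 1_000))   # 2.0, a smaller hypothetical batch
```

Cutting the batch size by a factor of five cuts the minimum wait by the same factor, which is the lever the text is pointing at.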

Its minimum is 1, resulting in stochastic gradient descent. Specify the input Workbench archive (.wbpz) to … The batch is then broken down into chunks of 100 or 200 records each, depending on the API version.
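The two-level split (records into batches, batches into chunks) can be modelled in a few lines. This is an illustration, not Salesforce's code. It assumes the chunk size Salesforce documents for the Bulk API: 100 records for API version 20.0 and earlier, 200 records for 21.0 and later. The 1,050 records and the batch size of 500 are hypothetical.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def split(items: Sequence[T], size: int) -> List[List[T]]:
    """Split items into consecutive groups of at most `size` elements."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [list(items[i:i + size]) for i in range(0, len(items), size)]


def chunk_size_for(api_version: float) -> int:
    """Records per processing chunk: 100 up to API 20.0, 200 from 21.0 on."""
    return 100 if api_version <= 20.0 else 200


records = list(range(1050))          # hypothetical: 1,050 records to load
for batch in split(records, 500):    # hypothetical batch size of 500
    chunks = split(batch, chunk_size_for(45.0))
    print(len(batch), [len(c) for c in chunks])
# 500 [200, 200, 100]
# 500 [200, 200, 100]
# 50 [50]
```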

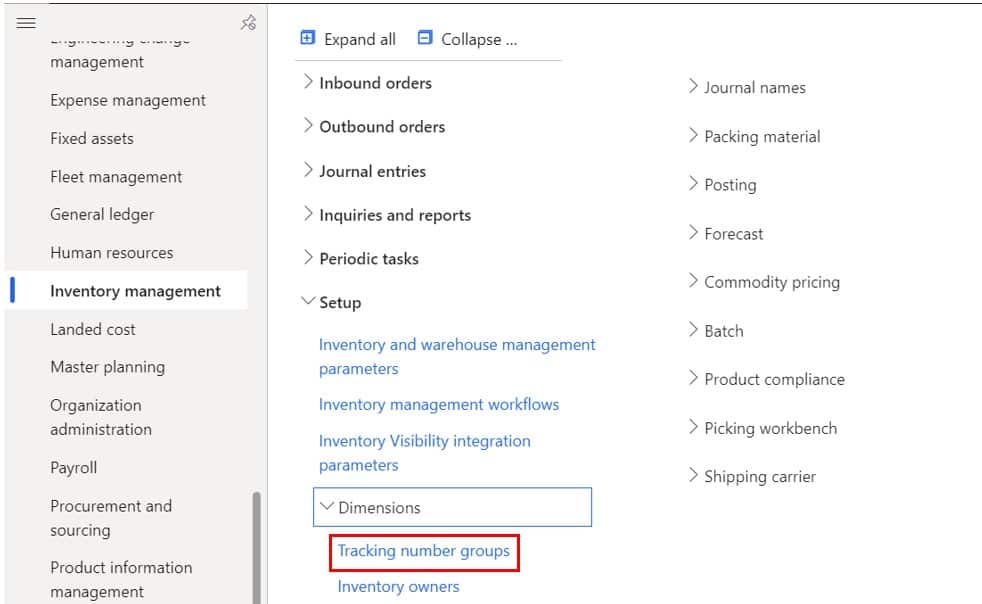

Make sure the scopeSize is less than 200. You can use the Database.executeBatch(sObject className, Integer scopeSize) method to set the batch size.
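In Apex the call looks like `Database.executeBatch(new MyBatch(), 100)`, where `MyBatch` is a hypothetical class implementing `Database.Batchable` and 100 is the scope size. Because the other examples here are in Python, the sketch below only models the effect of that second argument. The records are handed to `execute()` in groups of at most the scope size, and each `execute()` call runs in its own transaction with its own governor limits. A smaller scope therefore means more, lighter transactions.

```python
import math


def execute_calls(total_records: int, scope_size: int) -> int:
    """How many execute() calls a batch job makes for total_records records."""
    if scope_size < 1:
        raise ValueError("scope size must be at least 1")
    if total_records < 0:
        raise ValueError("record count cannot be negative")
    return math.ceil(total_records / scope_size)


print(execute_calls(10_000, 200))  # 50
print(execute_calls(10_000, 50))   # 200
```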

This is fast, but the direction of each gradient step is based on only one example, so the loss may jump around.

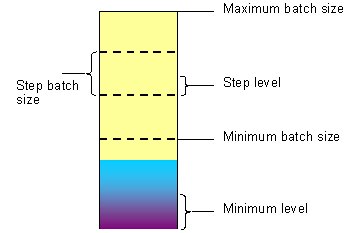

You can often resolve errors like "Too many code statements: 200001" just by reducing the batch size from the current default of 5000. When there is a large setup cost, managers tend to increase the batch size to spread that cost over more units. If you are not able to find that option, you can use the following query. Its maximum is the number of all samples, which makes gradient descent accurate: the loss will decrease towards the minimum if the learning rate is small enough, but iterations are slower.
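The two extremes of batch size in gradient descent (1 sample versus all samples) are the same loop with a different `batch_size`. This sketch fits a single weight in y ≈ w·x by mini-batch gradient descent on mean squared error using only the standard library. The data are noise-free points from the hypothetical line y = 3x, so every batch size should end up at w ≈ 3. The point is the mechanics, not a comparison of accuracy. The learning rate, epoch count and data are illustrative choices.

```python
import random
from typing import List, Tuple


def minibatch_gd(data: List[Tuple[float, float]], batch_size: int,
                 lr: float = 0.1, epochs: int = 50, seed: int = 0) -> float:
    """Fit y ~ w * x by mini-batch gradient descent on mean squared error.

    batch_size=1 is stochastic gradient descent;
    batch_size=len(data) is full-batch gradient descent.
    """
    if not 1 <= batch_size <= len(data):
        raise ValueError("batch_size must be between 1 and len(data)")
    rng = random.Random(seed)
    samples = list(data)
    w = 0.0
    for _ in range(epochs):
        rng.shuffle(samples)  # fresh sample order each epoch
        for start in range(0, len(samples), batch_size):
            batch = samples[start:start + batch_size]
            # Gradient of mean((w*x - y)^2) over this batch only.
            grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= lr * grad
    return w


# Noise-free points on y = 3x for x = 0.1, 0.2, ..., 2.0.
data = [(k / 10, 3 * k / 10) for k in range(1, 21)]
for bs in (1, 5, len(data)):
    print(bs, round(minibatch_gd(data, bs), 3))  # w close to 3.0 each time
```

With `batch_size=1` the weight is updated 20 times per epoch from single points. With `batch_size=len(data)` it is updated once per epoch from the exact full gradient.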

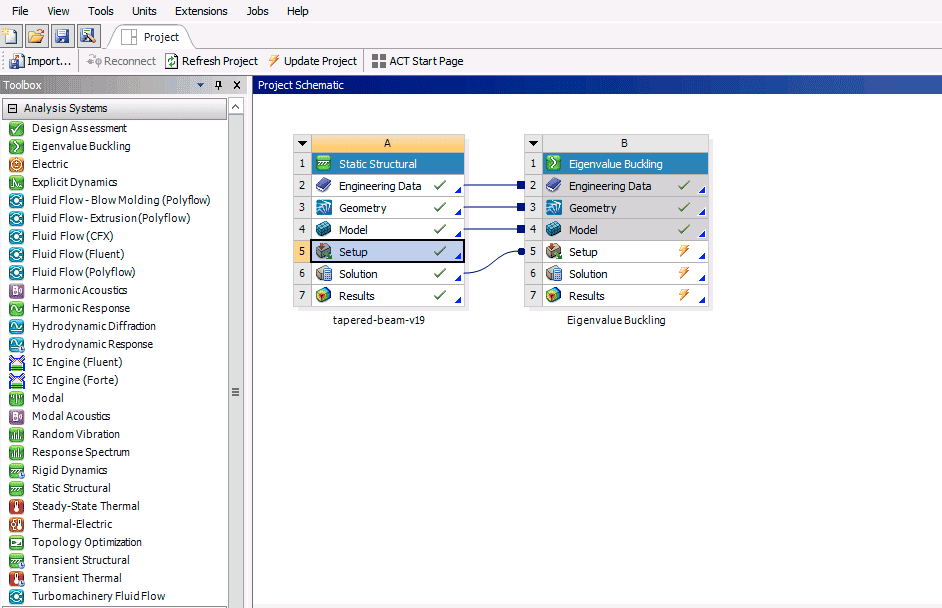

This can be expensive if the additional units produced are not immediately used or sold, since they may become obsolete.
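The pull towards bigger batches comes from simple division. A fixed setup cost shared by more units gives each unit a smaller share. The catch is that those extra units sit in inventory until they are used. A minimal sketch with a hypothetical setup cost of 500 per batch:

```python
def setup_cost_per_unit(setup_cost: float, batch_size: int) -> float:
    """Share of a fixed setup cost carried by each unit in one batch."""
    if batch_size < 1:
        raise ValueError("batch size must be at least 1")
    return setup_cost / batch_size


# Hypothetical setup cost of 500 per batch.
for q in (10, 100, 1000):
    print(q, setup_cost_per_unit(500.0, q))
# 10 50.0
# 100 5.0
# 1000 0.5
```

Going from 100 to 1,000 units saves only 4.5 per unit in setup cost but multiplies the stock that must be stored, which is where the obsolescence risk comes from.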
